Most modern web applications provide real-time functionality (the “real-time web”): a set of technologies and practices that let users receive information as soon as its authors publish it, rather than requiring them, or their software, to check a source periodically for updates. Moreover, in highly scalable and complex architectures, server-side code execution is often asynchronous. For example, consider a task-based UI that submits a command like “book a plane ticket” to a web service. The server could process that command hours later: it might simply be enqueued on a command bus and picked up afterwards. In scenarios like this, the client can’t count on an up-to-date read model right after sending the command. As a consequence, to receive feedback as soon as possible, every involved client would have to poll the server until command execution reaches a significant state (e.g. in progress, completed, canceled) and the read model is updated and ready for queries.
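To make the cost of polling concrete, here is a minimal client-side polling loop. It is a sketch under stated assumptions: `getCommandStatus(commandId)` is a hypothetical function returning a Promise of a status string (for example by calling a status endpoint), and the stop states and the `intervalMs`/`maxAttempts` defaults are illustrative choices, not part of any real API.

```javascript
// Hypothetical stop states: polling ends when the command reaches one of these.
const TERMINAL_STATES = new Set(["completed", "canceled"]);

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Polls a (hypothetical) status source until the command reaches a terminal
// state, or gives up after maxAttempts requests.
async function pollCommandStatus(getCommandStatus, commandId, { intervalMs = 1000, maxAttempts = 60 } = {}) {
  let lastStatus;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    lastStatus = await getCommandStatus(commandId);
    if (TERMINAL_STATES.has(lastStatus)) {
      return { status: lastStatus, attempts: attempt };
    }
    // Wait before the next request, but not after the final attempt.
    if (attempt < maxAttempts) await sleep(intervalMs);
  }
  throw new Error(`Command ${commandId} is still "${lastStatus}" after ${maxAttempts} attempts`);
}

// Usage with a fake status source that reports "completed" on the third request.
let requests = 0;
const fakeGetCommandStatus = async () => ["queued", "in progress", "completed"][Math.min(requests++, 2)];
pollCommandStatus(fakeGetCommandStatus, "cmd-42", { intervalMs: 10 })
  .then(({ status, attempts }) => console.log(status, attempts)); // completed 3
```

Every client keeps issuing these requests even when nothing has changed, and still learns about a state change up to `intervalMs` late. That overhead is what server push removes.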

Before WebSockets, classic implementations of this kind of real-time feature were not straightforward: they typically relied on strategies such as the forever frame (see “Comet”) or periodic/long polling. Today, modern browsers and web servers support WebSockets, which establish bidirectional, persistent connections so that a client can receive content “pushed” by the server. In the ASP.NET world, SignalR is a new and growing library that uses WebSockets under the covers when they are available and gracefully falls back to other techniques when they aren’t, while the application code stays the same. SignalR also provides a simple, high-level API for server-to-client RPC (calling JavaScript functions in clients’ browsers from server-side code) in ASP.NET applications, plus useful hooks for connection management such as connect/disconnect events, connection grouping and authorization.
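The fallback idea can be pictured as “pick the most preferred transport that both ends support”. The sketch below is a simplified model, not SignalR’s actual negotiation code: the transport names mirror those the SignalR JavaScript client accepts, but the preference order and the capability sets passed in are assumptions for illustration.

```javascript
// Simplified model of transport fallback (NOT SignalR's real negotiation logic).
// Names mirror the SignalR JavaScript client's transports; the order is assumed.
const TRANSPORT_PREFERENCE = ["webSockets", "serverSentEvents", "foreverFrame", "longPolling"];

// Returns the first preferred transport supported by both client and server,
// or null when they share none (the connection cannot start).
function chooseTransport(clientSupports, serverSupports) {
  for (const name of TRANSPORT_PREFERENCE) {
    if (clientSupports.has(name) && serverSupports.has(name)) {
      return name;
    }
  }
  return null;
}

// Usage: a client without WebSocket support connecting to a full-featured server.
const browserTransports = new Set(["serverSentEvents", "longPolling"]);
const serverTransports = new Set(TRANSPORT_PREFERENCE);
console.log(chooseTransport(browserTransports, serverTransports)); // serverSentEvents
```

Because the choice happens below the hub API, application code never depends on which transport won. The real SignalR JavaScript client also lets you restrict the candidates, e.g. `$.connection.hub.start({ transport: ["webSockets", "longPolling"] })`.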

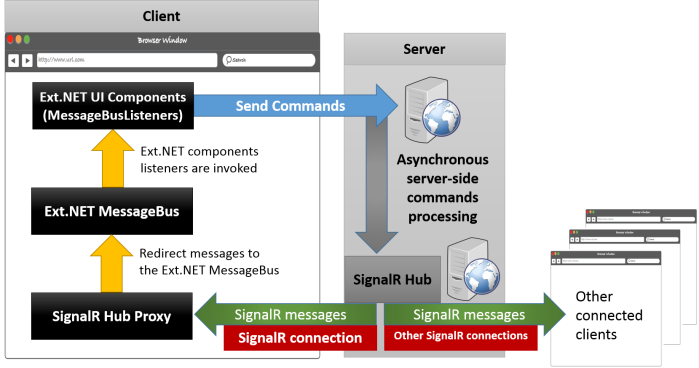

Developers currently using the Ext.NET component framework can take advantage of SignalR by combining it with the Ext.NET MessageBus component, which provides a simple and robust client-side infrastructure for propagating notifications to listening UI components. The reference scenario for this post is shown in the figure above and works as follows:

1. The client browser establishes a persistent connection to a server-side SignalR application hub. From then on, the client maintains a reference to an auto-generated hub proxy.

2. The Ext.NET UI components submit commands to the server.

3. At any point during server-side command execution, the server can use the SignalR hub to push notification messages back to all the involved clients via RPC.

4. Any client receiving a SignalR message through the hub proxy forwards it to the Ext.NET MessageBus.

5. Depending on the message name (i.e. its type), the Ext.NET UI components are updated by message handler functions. Each Ext.NET component exposes a MessageBusListeners property (client-side handlers of MessageBus client-side events) and a MessageBusDirectEvents property (server-side handlers of MessageBus client-side events).
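Steps 3 to 5 can be simulated without a browser. The sketch below is a toy model under explicit assumptions: `createMessageBus` is a hypothetical stand-in for `Ext.net.Bus` (it only models name matching with a `*` wildcard, as in the `"Customers.*"` listener name), and `hubProxy` is a plain object standing in for `$.connection.applicationHub`.

```javascript
// Hypothetical stand-in for Ext.net.Bus: listeners subscribe with a name
// pattern where "*" matches any sequence of characters.
function createMessageBus() {
  const listeners = [];
  // Escape regex metacharacters in each literal part, then join parts with ".*".
  const toRegExp = (pattern) =>
    new RegExp("^" + pattern.split("*").map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&")).join(".*") + "$");
  return {
    subscribe(pattern, handler) {
      listeners.push({ regex: toRegExp(pattern), handler });
    },
    publish(name, data) {
      for (const { regex, handler } of listeners) {
        if (regex.test(name)) handler(name, data);
      }
    },
  };
}

// Stand-in for the auto-generated SignalR hub proxy (step 1).
const hubProxy = { client: {} };
const bus = createMessageBus();

// Step 4: forward every pushed message to the bus, as loadHub does in the view.
hubProxy.client.publish = (name, message) => bus.publish(name, message);

// Step 5: a "component" listening to all customer messages.
const log = [];
bus.subscribe("Customers.*", (name, data) => log.push(`${name}: ${data.CustomerId}`));

// Step 3, simulated: the server invokes the client-side publish method.
hubProxy.client.publish("Customers.Added", { Id: 1, CustomerId: 42 });
hubProxy.client.publish("Orders.Added", { Id: 2, CustomerId: 7 }); // no matching listener

console.log(log); // [ 'Customers.Added: 42' ]
```

The forwarding line works unchanged because both `publish` functions take a message name and a payload.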

Let’s look at a minimal example implemented in an ASP.NET MVC web application. Here’s the view:

```cshtml
@using Ext.Net;
@using Ext.Net.MVC;

@{
    Layout = null;
    var X = Html.X();
}

<!DOCTYPE html>
<html>
<head>
    <title>SignalR and Ext.NET MessageBus example</title>
    @Scripts.Render("~/bundles/modernizr")
    @Scripts.Render("~/bundles/jquery")
    @Scripts.Render("~/bundles/jquery.signalR")
    <script src="@Url.Content("~/signalr/hubs")" type="text/javascript"></script>
</head>
<body>
    @(X.ResourceManager())

    @(X.Viewport().Padding(20).Items()
        .Add(X.Button().Icon(Icon.Add).Text("New customer")
            .DirectClickAction("AddCustomer", "Customers"))
        .Add(X.Button().Icon(Icon.Delete).Text("Delete customer")
            .DirectClickAction("DeleteCustomer", "Customers"))
        .Add(X.GridPanel().Title("Customers").MarginSpec("10 0 0 0").Icon(Icon.User)
            .Store(X.Store()
                .AutoLoad(true)
                .Proxy(X.AjaxProxy().Url("/customers/all").Reader(X.JsonReader()))
                .Model(X.Model()
                    .Fields(fields =>
                    {
                        fields.Add(X.ModelField().Name("Id"));
                        fields.Add(X.ModelField().Name("Name"));
                        fields.Add(X.ModelField().Name("Surname"));
                        fields.Add(X.ModelField().Name("Email"));
                    })))
            .ColumnModel(columnModel =>
            {
                columnModel.Columns.Add(X.Column().Text("Name").DataIndex("Name").Flex(1));
                columnModel.Columns.Add(X.Column().Text("Surname").DataIndex("Surname").Flex(1));
                columnModel.Columns.Add(X.Column().Text("Email").DataIndex("Email").Flex(1));
            })
            .MessageBusListeners(new MessageBusListener
            {
                Name = "Customers.*",
                Handler = "this.getStore().reload();",
                Buffer = 500
            }))
        .Add(X.GridPanel().Title("Events log").MarginSpec("10 0 0 0").Icon(Icon.Report)
            .Store(X.Store()
                .Model(X.Model()
                    .Fields(fields =>
                    {
                        fields.Add(X.ModelField().Name("EventId"));
                        fields.Add(X.ModelField().Name("DateTime"));
                        fields.Add(X.ModelField().Name("Name"));
                        fields.Add(X.ModelField().Name("Data"));
                    })))
            .ColumnModel(columnModel =>
            {
                columnModel.Columns.Add(X.Column().Text("EventId").DataIndex("EventId").Flex(1));
                columnModel.Columns.Add(X.Column().Text("DateTime").DataIndex("DateTime").Flex(1));
                columnModel.Columns.Add(X.Column().Text("Event name").DataIndex("Name").Flex(1));
                columnModel.Columns.Add(X.Column().Text("Event data").DataIndex("Data").Flex(1));
            })
            .MessageBusListeners(new MessageBusListener
            {
                Name = "Customers.*",
                Handler = "this.getStore().add({ EventId: data.Id, DateTime: getFormattedDateTime(), Name: name, Data: \"CustomerId: \" + data.CustomerId });"
            })))

    <script type="text/javascript">
        // Hypothetical helper used by the "Events log" listener above:
        // the original example does not show its definition.
        var getFormattedDateTime = function () {
            return Ext.Date.format(new Date(), "Y-m-d H:i:s");
        };

        var loadHub = function () {
            // Auto-generated proxy for the server-side ApplicationHub
            var hubProxy = $.connection.applicationHub;

            // Server-to-client RPC target: forward every pushed message to the Ext.NET MessageBus
            hubProxy.client.publish = function (name, message) {
                Ext.net.Bus.publish(name, message);
            };

            // Open the connection (WebSockets when available, otherwise a fallback transport)
            $.connection.hub.start().done(function () {
                Ext.net.Notification.show({
                    title: "Info",
                    iconCls: "#Accept",
                    html: "SignalR connection is active!"
                });
            });
        };

        $(document).ready(function () {
            loadHub();
        });
    </script>
</body>
</html>
```

As you can see, the view is made up of the following Ext.NET components:

– A couple of buttons which send commands to the server (e.g. Add/Remove a customer)

– A grid panel which holds the current customer data

– A grid panel which holds trace data about messages received through the SignalR connection.

The integration between the client-side SignalR hub proxy and the Ext.NET MessageBus is done in the loadHub JavaScript function: it wraps the SignalR hub named “applicationHub” so that every received message is forwarded to the Ext.NET MessageBus and, from there, to the listening UI components. Note that the client-side “publish” method invoked through SignalR and the Ext.NET MessageBus “publish” function take the same parameters: the message name and the message data. This is why bridging the two worlds takes a single line of code.

In the example above, the Store of the first GridPanel is reloaded every time its MessageBusListener intercepts a message whose name starts with the prefix “Customers.”. Note the Buffer property: it is very useful when a component is hit by a message storm and we want the UI to be refreshed only once, after a specified delay (500 ms here) during which no further messages have been received.
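The buffering behaviour is essentially a trailing-edge debounce. The sketch below models it, based on how Ext JS documents its `buffer` listener option (a new event within the delay cancels the pending call and reschedules it with the latest arguments); `buffered` is a hypothetical helper, not an Ext.NET API.

```javascript
// Trailing-edge debounce: the handler runs once, delayMs after the last call,
// with the arguments of that last call.
function buffered(handler, delayMs) {
  let timer = null;
  return function (...args) {
    clearTimeout(timer); // a new message cancels the pending run
    timer = setTimeout(() => {
      timer = null;
      handler.apply(this, args);
    }, delayMs);
  };
}

// Usage: a burst of 10 "Customers.*" messages triggers a single store reload.
let reloads = 0;
const reloadCustomers = buffered(() => { reloads += 1; }, 50);
for (let i = 0; i < 10; i++) reloadCustomers("Customers.Added", { Id: i });
setTimeout(() => console.log(reloads), 200); // 1
```

Without the buffer, the same burst would reload the grid’s store ten times in a row.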

What about the server-side code? It is not central to this post. The important point is that, at some point during server-side command execution, the code retrieves the SignalR hub context, selects which clients will receive the RPC (for simplicity, this example sends the message to all connected clients) and finally pushes a message containing the data the clients need to update the UI. Here is an example:

```csharp
GlobalHost.ConnectionManager.GetHubContext<ApplicationHub>()
    .Clients.All.publish("Customers.Added", new CustomerAdded { ...<data for clients>... });
```
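To illustrate the “select the recipients, then call a client method by name” pattern, here is a toy in-memory model. It is an assumption-laden sketch, not SignalR’s implementation: `createHubContext`, `connect`, `disconnect`, `clients.all` and `clients.group` are hypothetical names that loosely mirror the dynamic `Clients.All.publish(...)` call above, using a JavaScript `Proxy` to forward any method name to the selected connections.

```javascript
// Toy model of server-to-client RPC (NOT SignalR's implementation).
function createHubContext() {
  const connections = new Map(); // connectionId -> { client, groups }

  // Builds an object whose arbitrary method calls are forwarded to the
  // selected connections, if they registered a method with that name.
  const proxyFor = (selectConnections) =>
    new Proxy({}, {
      get: (_target, methodName) => (...args) => {
        for (const conn of selectConnections()) {
          const method = conn.client[methodName];
          if (typeof method === "function") method(...args);
        }
      },
    });

  return {
    connect(connectionId, client, groups = []) {
      connections.set(connectionId, { client, groups: new Set(groups) });
    },
    disconnect(connectionId) {
      connections.delete(connectionId);
    },
    clients: {
      all: proxyFor(() => [...connections.values()]),
      group: (name) => proxyFor(() => [...connections.values()].filter((c) => c.groups.has(name))),
    },
  };
}

// Usage: two connected clients, one of them in a hypothetical "backoffice" group.
const hub = createHubContext();
const received = [];
hub.connect("conn-a", { publish: (name, data) => received.push(`a <- ${name} ${data.CustomerId}`) }, ["backoffice"]);
hub.connect("conn-b", { publish: (name, data) => received.push(`b <- ${name} ${data.CustomerId}`) });
hub.clients.all.publish("Customers.Added", { CustomerId: 42 });                // both clients
hub.clients.group("backoffice").publish("Customers.Deleted", { CustomerId: 42 }); // conn-a only
// received: "a <- Customers.Added 42", "b <- Customers.Added 42", "a <- Customers.Deleted 42"
```

Broadcasting to everyone keeps the example simple; targeting a group or a single connection is usually preferable when only some users care about a given command.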
